Part 3. Build your own Master Data Management solution

Fabien Arnaud , Data Architect

Keyrus Belgium

INTRODUCTION

Embarking on the journey to build your own Master Data Management (MDM) solution is not merely a venture into uncharted territory; it's a quest for lessons that resonate with experience. In the face of readily available off-the-shelf solutions from industry behemoths like Informatica, Stibo, Semarchy, SAP, or IBM, why choose the path less traveled?

Part 3 unveils a narrative woven with insights gained from the trenches of developing a bespoke MDM solution. Instead of dwelling on presumptions of superiority, we pivot toward the invaluable lessons learned throughout the process. What unfolds is a tapestry of challenges, triumphs, and unexpected discoveries that challenge the conventional wisdom of opting for established software.

Join us in this exploration, where we not only question the need to reinvent the wheel but also reflect on the wealth of knowledge gained in doing so. As we navigate the intricacies, we share the wisdom gleaned from the real-world nuances of building a customized MDM solution.

LESSONS LEARNED

Multi second runtime is too long

Being used to data warehouse environments, the dual entry point (frontend/backend) was probably the most complex challenge that I faced on this project.

Not only did I have to build a traditional batch ETL process for the daily ingestion of data from all source systems, but I also needed to cater for the interactions of data stewards with the MDM in real-time, such as entity matching and data enrichment.

Both of these entry points have very different requirements, since the first is a bulk insert/update process that runs once per day, while the second is required to run immediately upon a user’s request, at any moment, and most likely numerous times on the same day.

A batch ETL process typically runs overnight and can afford to take time, provided that it completes before the next business day. A user-triggered action however needs to be as instantaneous as possible. We can’t expect the user to wait for more than a few seconds for her/his action to be carried out!

As the amount of source records and business logic slowly increased over time, several reviews of the ETL logic were necessary to keep the runtime within reasonable limits. Among the improvements applied were:

- simplification of some SQL scripts to optimize the execution plan

- parallelization of steps that are not dependent on each other

- creation of missing database indexes

- optimization of the logic (e.g. applying filter as early as possible, keeping the strict minimum rows and columns for the job)

- limited upscaling of the Azure Function app service plan

The takeaway here is to thoroughly stress test your system, more importantly when runtime SLAs are demanding.

Data type

While the dynamic ETL design used in our project brought huge benefits in terms of flexibility, it also came with its share of challenges. Having a single piece of code to manage an unlimited number of tables is great but implies that column names are never explicitly mentioned. They are passed in as variables and therefore all follow the same transformation path. A string is however handled differently than a number or a date. Try inserting a blank value in a numeric field, or letters in a date field!

The built-in SQL Server metadata table INFORMATION_SCHEMA.COLUMNS was extremely valuable in this context, allowing us to dynamically look up the data type of each column and subsequently apply the appropriate transformations, such as setting the correct default value for each type of column.

Mind software limitations

As described under the “Technology” section, our MDM runs on a modern cloud architecture. Serverless solutions make it incredibly easy to deploy highly available, scalable applications. However, be aware of the limits when planning your architecture.

Software limitations forced us into some unexpected redesigns, luckily not involving dramatic changes:

- Inbound HTTP requests in Azure Logic Apps timed out after 235 seconds. This was enough for the frontend-triggered processes but not for the daily batch ETL jobs that refresh larger sets of data. For those, pointing directly to the Azure Function URL instead of going through the Logic App workflow solved the issue

- Azure Function deployment slots are not compatible with Logic Apps. There is no possibility to select the function’s deployment slot in Logic App and documentation states that it is currently not supported. We noticed that calling Azure Functions with deployment slots enabled from within a Logic App was resulting in an overload of the underlying Function App VM. We worked around this problem by switching from the built-in Function App action to the HTTP request action in Logic App. Since each deployment slot carries its own URL, the HTTP request action allowed us to target the exact function’s deployment slot desired.

CONCLUSION

We knew that this project wouldn’t be easy. Building Your Own MDM solution is not a common practice.

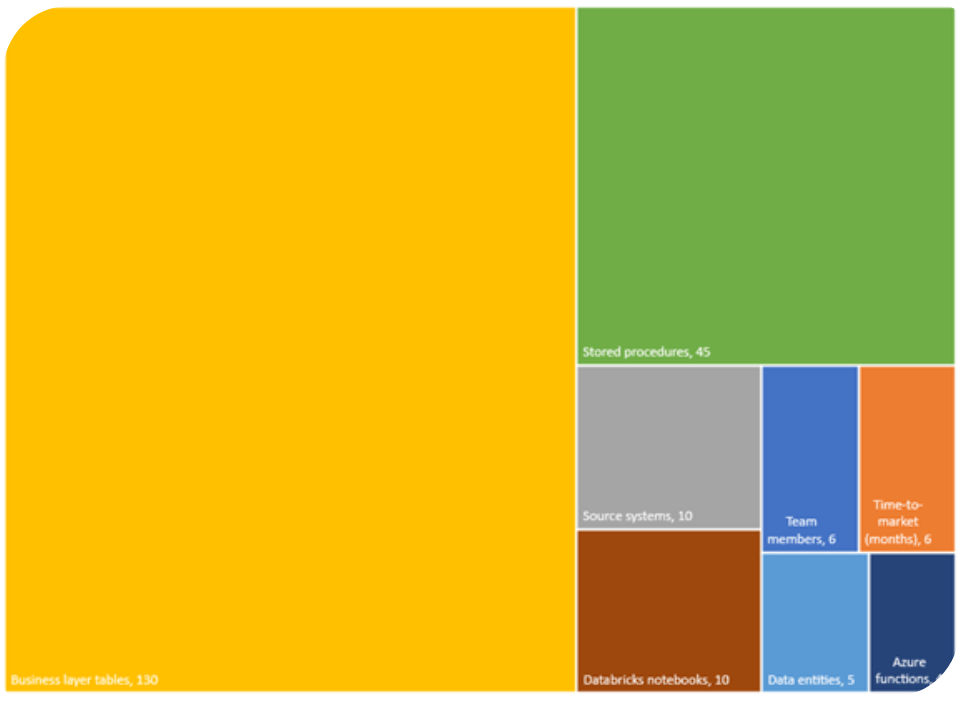

The first functional version was nevertheless achieved within 6 months, despite a continuous scope creep that led us to deliver a much more complete system than originally planned for this initial PoC:

- All 5 data entities in place

- Fuzzy matching algorithm added to automatically suggest the best matching candidates for every source record entering the MDM

- Data enrichment enabled to add extra flags and classifications, but also to override source values as needed

- Operational management of project entities from the MDM frontend

The project in figures:

Thank you for joining us on this three-part journey, where we guided you through the process of constructing a Master Data Management solution. If you have questions about the topic or are interested in discovering how we can contribute to your business, don't hesitate to get in touch the author of the article: fabien.arnaud@keyrus.com.

ABOUT KEYRUS

Keyrus is a leading data, digital, and business consulting firm that leverages its extensive business and technical expertise to transform data into insightful and valuable solutions.

We're on the hunt for individuals who are not just driven by ambition, but also by a genuine desire to push the world forward - as active contributors, not mere spectators. Our guiding principle, #MakeDataMatter, is embedded in the very fabric of our ethos. Explore our available roles here.