Part 2. Build your own Master Data Management solution

Fabien Arnaud , Data Architect

Keyrus Belgium

INTRODUCTION

Challenging the conventional wisdom

The audacity to forge a path less traveled often sparks curiosity, skepticism, and perhaps even a touch of incredulity. In Part 1, we dared to question the prevailing norm: Why construct a Master Data Management (MDM) solution from scratch when a plethora of off-the-shelf options promise efficiency, rapid deployment, and battle-tested stability? The giants of the market — Informatica, Stibo, Semarchy, SAP, IBM — loom large, offering turnkey solutions that seemingly render the endeavor of crafting one's own MDM solution a superfluous pursuit.

Yet, here we are, poised on the precipice of Part 2, unyielding in our pursuit of a different narrative. The journey to build your own MDM solution is not merely a technical odyssey; it's a philosophical exploration, challenging assumptions that conventionally favor expediency over craftsmanship. Are we, perhaps, too presumptuous in our belief that a bespoke solution can outshine established offerings? And if not, why the audacity to reinvent the wheel?

Join us as we delve deeper into the intricacies, challenges, and unique advantages of crafting a personalized MDM solution in Part 2 of our expert opinion series. Let's unravel the layers of this provocative decision and explore how it can redefine the landscape of data management.

DESIGN

Consistency

At the core of any MDM solution is of course data quality. The ultimate goal is to provide accurate information and this is done by keeping a single version of the data. Repeating information across data stores, tables or records increases the risk of having misalignments or deviating from the truth.

With a traditional data warehouse background, I’m used to star schemas where performance is favored over consistency. Here, a normalized model was applied instead for full data consistency. Every single data entity or attribute is only stored once and referred to by its surrogate key. This design makes it impossible to have the same element described differently.

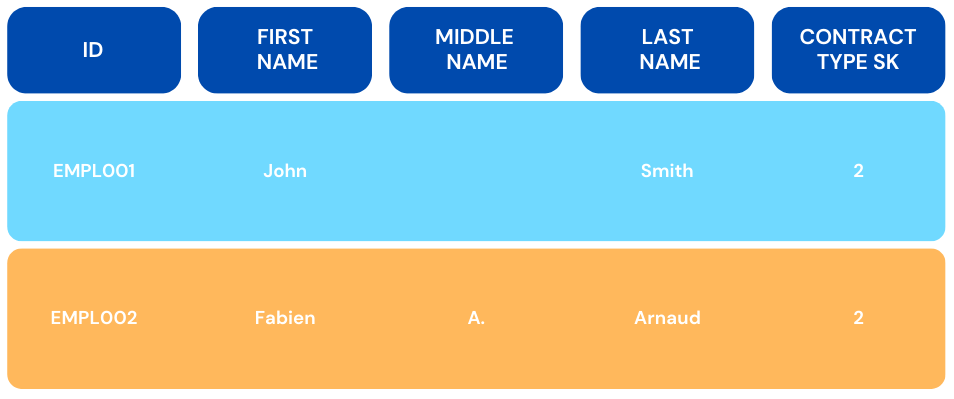

Below is an illustration of how resource records could be stored in a typical reporting data mart:

You will notice that the contract type description is repeated on each record to improve performance at the cost of data quality. Discrepancies can start to crawl into your data for various reasons: ETL bugs, technical failures, incorrect business processes, etc…

Here the “Contractor” type appears with 2 different spellings. Such a discrepancy can significantly complicate reporting. Business analysts have to get the data fixed at the source before reporting on it. Or be aware of it and remember to select both spellings in order to have a complete view of contractors, by using either a list of values or a word pattern instead of a single value filter. Forgetting to do so could result in wrong insights and lead to incorrect decisions.

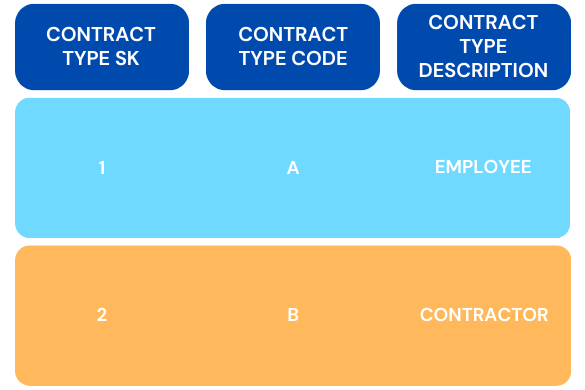

In our MDM design, the contract type attribute is managed in a separate reference table where each description is stored only once. In the resource table, the contract type is referred to by its surrogate key which has the value 2 in the below example:

If ever the “Contractor” description is updated, it will automatically reflect on every resource record linked to surrogate key 2, preventing any inconsistency.

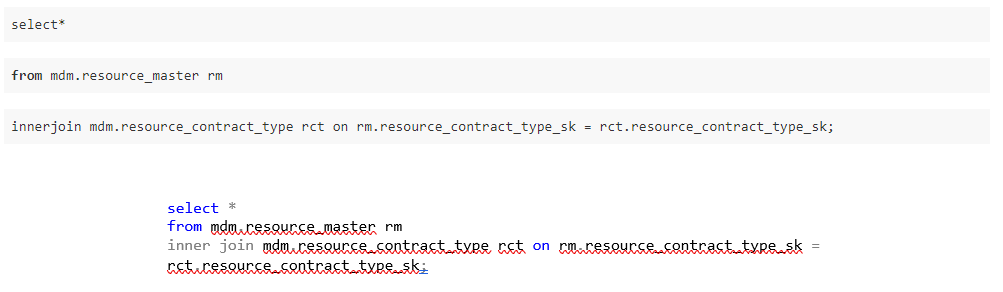

Performance is slightly impacted since retrieving the information forces us to join 2 tables:

But master data often ranges in the thousands of records, occasionally millions, hardly more. It is therefore perfectly fit for such a model.

SCALABILITY

Scalability was the second corner stone of our project. The initial scope was deliberately small to contain spend and increase the time to market. Nevertheless, the ability to add functionalities, integrate additional business applications and roll out to more legal entities had been foreseen since day one.

We started the journey with the smallest Azure SQL DB SKU (2vCores and the default 32GB for data storage), Azure Functions on a Consumption hosting plan and the entry-level Standard DS3v2 General Purpose Databricks cluster. This was enough to build a fully functional Proof of Concept.

When we started to connect additional source systems and offer new functionalities such as fuzzy matching, data enrichment, and linking, the increased load invited us to move to a 4vCores database and a P2v2 Azure Functions App Service Plan. At the effort of only a few clicks!

Azure Automation runbooks can be deployed to further exploit the power of scalability provided by the cloud. It can be used to scale the database according to the time of the day for example: scaling up during the day to support user activities and back down when the activity is low, or based on alerts triggered when the database exceeds a certain usage threshold.

FLEXIBILITY

The ability to adapt to a changing environment and evolving demands was not a direct business requirement but constituted a true added value to the project, and certainly a fundamental differentiator with most turnkey software.

Meta-driven framework

In a standard ETL environment, ingesting a new file would typically require the creation of a new dedicated ETL job, and sometimes actually several: 1 for each layer that the file needs to traverse in order to reach the final presentation layer. This affects the capacity to extend the system with new information, and makes it overly complex to maintain on the long run.

In our setup, the whole ETL logic is managed from a set of tables that store all the details used to process the data from the landing up to the final Golden Record information.

These metadata tables include information on:

- Batches & jobs

- Table structures

- Source to target mappings

- Flag conversions

- Landing filters

- Source files

- Environment configuration

Thanks to this Metadata-driven Framework, loading a new file into MDM only involves 3 simple steps:

- Creating the landing table in the database

- Adding a new entry in the job metadata table

- Describing the file structure in the source file table

You may have noticed that we do not actually create any new ETL job. The “dynamic ETL design” section coming up next will further expand on the generic stored procedures that were built to work hand in hand with the metadata.

No more than a handful of generic stored procedures is sufficient to handle the hundreds of MDM jobs defined for this project!

DYNAMIC ETL DESIGN

In a normalized model such as an MDM data store, data structures are simple and homogeneous.

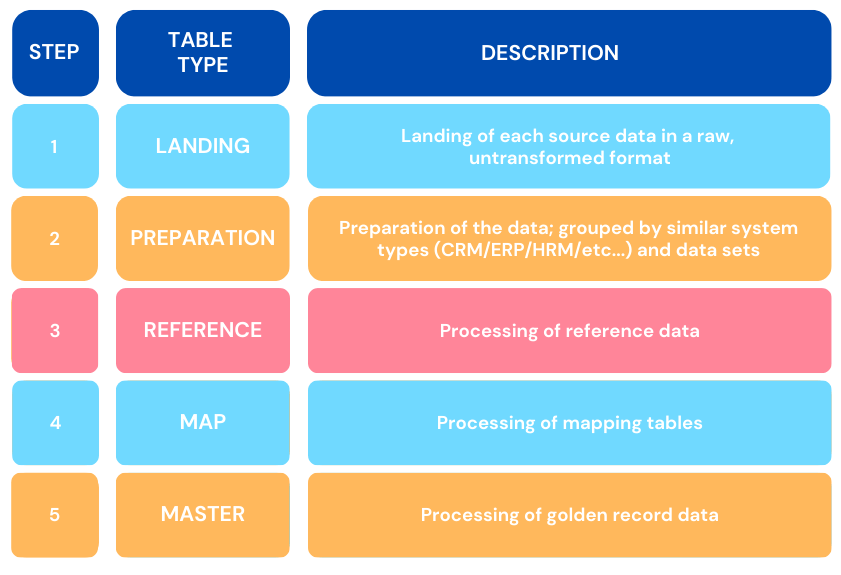

We defined for our project 5 types of tables, corresponding to the 5 steps that the data follows from the raw data up to the final golden record tables.

The steps above form a funneling approach that allows an easy integration of new systems with very limited redesign involved. As explained in the “Metadata-driven Framework”, new sources only require new landing tables and a little bit of metadata configuration. Data then flows through the next steps automatically unless the source is a new system type or includes new reference data sets.

The diagram below illustrates this data flow:

At the top of the diagram are the numerous landing tables, one for each data set from each source system. This is step 1.

Data of similar source system types are merged into the preparation layer (step 2). Whether 1, 2, or more ERPs are connected to the MDM, the resource data of each of them will be funneled into 1 single ERP resource preparation table. By doing so, only the landing layer is impacted when new systems are plugged to the MDM solution. All the layers downstream remain untouched!

Step 3 involves reference tables. These are populated by the system of record(s) previously defined in the metadata. The diagram indicates that HRM contract-type data was deemed clean enough to be used as MDM reference data. Based on the example given under the chapter on “consistency”, we can imagine our contract type table with only 2 records: “Employee” and “Contractor”.

Reference map tables are used to map source information to the harmonized values stored in the related reference table. This step 4 gives us the possibility of mapping for example both “Contractors” and “Contractor” values to our reference value “Contractor”.

When reaching the master table where Golden Records are presented (step 5), all contract-type variations are harmonized according to the mapping performed in the previous step. Reporting is thus made much easier since we now know that there can only be 1 spelling for each data value of an attribute.

Each type of table is governed by a strict naming convention and data structure design. This may sound over restrictive for some but provides the great advantage of managing the load of any number of table with just 1 generic stored procedure per table type. As I write this article, our MDM solution holds 550 tables, supported by only 5 generic stored procedures!

Adding new data sets is literally a matter of minutes!

ETL RULES CONFIGURABILITY

With the above design in mind, we then wondered how we could best expose the logic behind the generation of the Golden Records to the data stewards. We didn’t want the MDM to be a black box, and its final deliverables - the Golden Records - to be obscure elements that everyone would struggle to understand. For this, we had to address the 2 questions below:

- How could the business see what makes up a Golden Record?

- Ideally, how could the data steward be empowered with the ability to change the way Golden Records are generated?

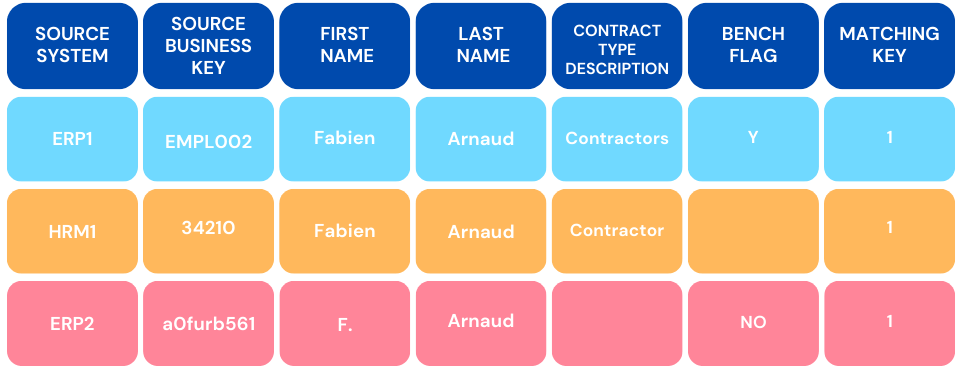

Imagine the following situation where the same employee is defined in several different business applications:

The 3 records must be merged into a single MDM Golden Record made up of the best-quality information.

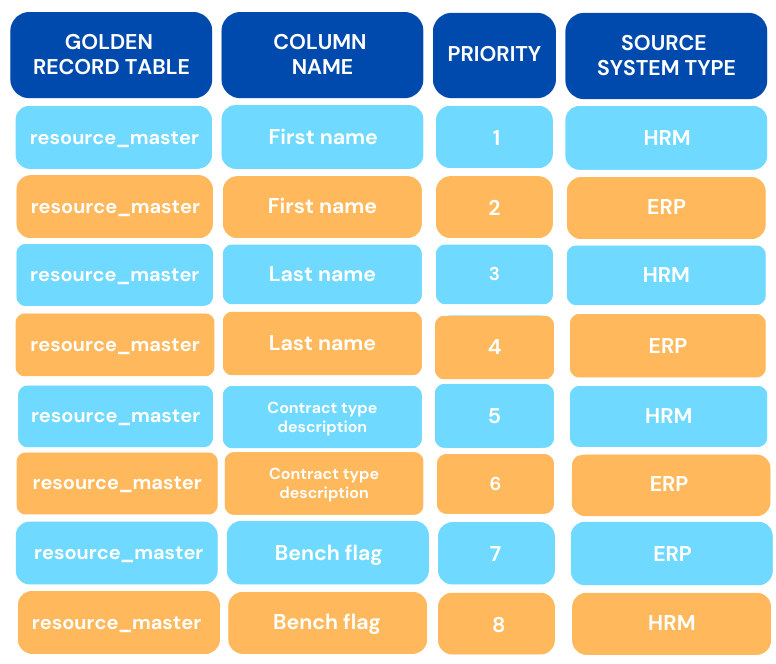

For the ETL to know where to take the information from, we created a new metadata table that defines priorities per attribute:

This table indicates that “First name”, “Last name” and “Contract type description” attributes are primarily sourced from the HRM system, and the “Bench flag” from the ERP. Should the value of any of these attributes be empty in the priority 1 system, the ETL will take the value from the next priority down. The example shows only 2 priorities for the sake of simplicity but our project uses up to 5.

Since we can have multiple ERPs and HRMs plugged to the MDM platform, sub-priorities have also been defined within each system type. An Azure Function reads the priority metadata each time the Golden Record process is started to assemble Golden Records accordingly.

For the data stewards to have full visibility on the rules that govern the Golden Record creation, this priority metadata table is exposed to them through a web app frontend. Additionally, the data stewards have the possibility of controlling the rules themselves by editing the table values if and when needed. Such changes are immediately applicable and take effect as soon as the Golden Record process is launched again.

In the upcoming Part 3 next week, we'll plunge even deeper into the heart of our exploration, delving extensively into the valuable lessons learned on the path to building your own Master Data Management solution.

Join us as we navigate through insights, challenges, and the wisdom gained from this compelling journey. Stay tuned for a deeper dive into the reservoir of knowledge awaiting you.

ABOUT KEYRUS

Keyrus is a leading data, digital, and business consulting firm that leverages its extensive business and technical expertise to transform data into insightful and valuable solutions.

We're on the hunt for individuals who are not just driven by ambition, but also by a genuine desire to push the world forward - as active contributors, not mere spectators. Our guiding principle, #MakeDataMatter, is embedded in the very fabric of our ethos. Explore our available roles here.